5 September 2018

Load Balancing NAV/BC in Docker Swarm

Tobias already explained how we can easily load balance the NAV web client with Traefik. Load balancing the Windows client is a bit more complicated…

TL;DR

NAV uses TCP connections with a proprietary protocol for Windows client communication and HTTP connections for the web client and the web services. In dynamically scaled environments, both the Windows client and the web client also need sticky sessions. These requirements, together with the limited choice of load balancers that support both Docker Swarm and Windows containers, make it hard to find a solution that works for all NAV client types.

In the end we implemented a custom solution that fulfills all these requirements and also supports Windows authentication via gMSAs (group Managed Service Accounts). You can find an example Docker stack and a quickstart guide in the GitHub repository here.

Requirements

Let’s first have a look at the communication of the different NAV clients and the needed load balancing mechanisms for them:

– The web client uses multiple stateful HTTP connections at a time, all of which must reach the same NAV instance. Hash-based load balancing, e.g. on the source port or source IP, isn’t sufficient: the source port changes with every connection of the same client, and in a NATed environment one source IP can be shared by many clients. The only workable solution here is cookie-based HTTP load balancing, which is also what Traefik uses, as Tobias described here.

– The Windows client uses only a single TCP connection with a proprietary protocol on top, and that connection is kept alive for the whole session. Round-robin load balancing at the TCP level is therefore enough, because each kept-alive connection is balanced only once, when it is opened.

– The web services (SOAP and OData) use simple stateless connections, so round-robin load balancing at the TCP layer works here as well.
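To make the two mechanisms concrete, here is a minimal Python sketch. It is not the author’s OpenResty implementation; the cookie name `nav_backend` and the choice of storing the backend address directly in the cookie are assumptions made for illustration.

```python
import itertools


class RoundRobin:
    """Connection-level round-robin: every new connection goes to the next backend."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(list(backends))

    def pick(self):
        return next(self._cycle)


class CookieSticky:
    """Cookie-based HTTP balancing for the web client.

    A request without a valid cookie is assigned a backend round-robin, and the
    caller should answer with a Set-Cookie carrying the returned value. Later
    requests with that cookie stay on the same backend while it is still listed.
    """

    COOKIE = "nav_backend"  # hypothetical cookie name

    def __init__(self, backends):
        self.backends = list(backends)
        self._rr = RoundRobin(self.backends)

    def route(self, cookies):
        """Return (backend, cookie value to set, or None if the cookie is still valid)."""
        pinned = cookies.get(self.COOKIE)
        if pinned in self.backends:
            return pinned, None       # existing session: keep it on its instance
        backend = self._rr.pick()     # new session or vanished instance: pick and pin
        return backend, backend


# Windows client / web services: each new TCP connection takes the next instance.
tcp = RoundRobin(["10.0.1.8:7046", "10.0.1.11:7046"])
print([tcp.pick() for _ in range(3)])  # ['10.0.1.8:7046', '10.0.1.11:7046', '10.0.1.8:7046']

# Web client: the first request gets a cookie, follow-up requests stick to it.
web = CookieSticky(["10.0.1.8:80", "10.0.1.11:80"])
backend, cookie = web.route({})
assert web.route({CookieSticky.COOKIE: cookie}) == (backend, None)
```

Putting the backend address into the cookie keeps the balancer stateless; a production setup might prefer an opaque value so internal IPs are not exposed to browsers.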

Searching for existing solutions

I first looked into solutions that use the Docker API to get information about the services for dynamic load balancing. Tobias already introduced Traefik in the last blog post, which is great but unfortunately doesn’t support TCP load balancing. Most of the other existing “swarm-aware” load balancers I looked at don’t work on Windows. Those that did lacked support for sticky sessions/cookie-based load balancing and/or TCP load balancing. I even tried setting up a heterogeneous cluster with Linux and Windows nodes to run these existing solutions in Linux containers, but often had trouble with the discovery of services running on Windows nodes.

This was getting more complex than I had initially thought. I didn’t want to use a traditional service registry such as Consul, as this would introduce even more complexity, and Docker Swarm already has all the information the load balancer needs. Such a registry would also require either a script in the NAV image that registers each instance or an additional external service that copies service information from the Docker API into the registry.

I also found some popular projects that dynamically (re-)generate an nginx config from information provided by the Docker API. Unfortunately, the open source version of nginx doesn’t natively support cookie-based load balancing, so this approach doesn’t fit our use case either.

In the end I decided to create a custom solution that uses the information already stored inside the swarm itself. I found that Docker’s integrated DNS server can be used to retrieve the IPs of the service instances. This means the Docker API doesn’t even have to be exposed to the load balancer, which also improves security a bit. The DNS server also takes the health of the service instances into account and quickly updates its answers accordingly.
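Discovery via Docker’s DNS boils down to a name lookup. A minimal Python sketch, assuming the load balancer shares an overlay network with the service and that the service is called `nav` as in the nslookup example in this post; `discover_backends` is a hypothetical helper, and the resolver is injectable so the logic can be tried without a swarm:

```python
import socket


def discover_backends(hostname, resolver=socket.gethostbyname_ex):
    """Return the IPv4 addresses `hostname` currently resolves to, deduplicated and sorted.

    With endpoint_mode: dnsrr, Docker's embedded DNS answers a service name with
    the IPs of its instances, so this list is the current backend pool.
    `resolver` must behave like socket.gethostbyname_ex: (name, aliases, addresses).
    """
    _name, _aliases, addresses = resolver(hostname)
    # inet_aton gives a byte string, so addresses sort numerically per octet
    return sorted(set(addresses), key=socket.inet_aton)


def fake_resolver(name):
    # Stand-in for Docker's DNS outside a swarm (duplicate and unsorted on purpose)
    return name, [], ["10.0.1.11", "10.0.1.8", "10.0.1.11"]


# Inside the load balancer container this would simply be discover_backends("nav").
print(discover_backends("nav", resolver=fake_resolver))  # ['10.0.1.8', '10.0.1.11']
```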

This design requires a load balancer that can resolve hostnames via a DNS server and use the resulting IPs as backends. Many load balancers such as nginx support this, but they can’t re-resolve hostnames dynamically at runtime, which is needed for failover and dynamic scaling of the backend services.
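The missing piece is re-resolving at runtime. The following Python sketch, with hypothetical names (`ResolvingPool`, a 5-second default TTL), caches the DNS answer and refreshes it once the TTL has expired, so the backend pool follows scaling and failover without reloading the load balancer:

```python
import time


class ResolvingPool:
    """Backend pool that re-resolves `hostname` whenever the cached answer is older than `ttl`."""

    def __init__(self, hostname, resolve, ttl=5.0, clock=time.monotonic):
        self.hostname = hostname
        self._resolve = resolve          # callable: hostname -> list of IPs
        self.ttl = ttl                   # seconds (hypothetical default)
        self._clock = clock
        self._backends = []
        self._expires = float("-inf")    # forces a lookup on first use

    def backends(self):
        now = self._clock()
        if now >= self._expires:
            try:
                self._backends = list(self._resolve(self.hostname))
            except OSError:              # includes socket.gaierror
                pass                     # DNS hiccup: keep the last known pool
            self._expires = now + self.ttl
        return list(self._backends)
```

Inside the swarm, `resolve` could be `lambda name: socket.gethostbyname_ex(name)[2]`. Keeping the last known pool on a failed lookup is a design choice; whether stale backends are preferable to none depends on how transient DNS errors should be handled.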

I decided to use OpenResty as the load balancer. OpenResty is a platform that bundles nginx with LuaJIT and a set of Lua libraries for extending it. This made it pretty easy to implement service discovery via DNS with dynamic updates as well as cookie-based load balancing. You can find this custom load balancer here. It can be configured to load balance NAV.

Load balancing NAV

The general usage of the load balancer, an example setup and a demonstration of the load balancing can be found here. Also have a look at the nginx.conf in this repository to get an idea of the configuration; it doesn’t differ much from a normal nginx configuration and is mostly self-explanatory.

The example setup for load balancing NAV can be found in this repository. To deploy the example Docker stack, follow the README.

The nginx.conf of the load balancer was adapted to define upstreams and servers for all NAV client types as well as for the ClickOnce endpoint. Each upstream uses the load balancing mechanism described above for its client type.
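Summarized as data, the listener layout might look like the following Python sketch. The port numbers are assumptions based on common NAV defaults (7046 client services, 7047 SOAP, 7048 OData) and the NAV container’s web (80) and ClickOnce (8080) ports, and the ClickOnce balancing method is a guess; check all of them against the actual nginx.conf. In nginx terms, “http” listeners live in the `http` context and TCP listeners in the `stream` context.

```python
# Hypothetical listener layout mirroring the upstreams described above.
# Ports are assumed NAV/container defaults; verify them against your nginx.conf.
LISTENERS = {
    80: ("web client", "http", "cookie"),                # stateful HTTP, sticky
    7046: ("Windows client", "stream", "round_robin"),   # one long-lived TCP connection
    7047: ("SOAP web services", "stream", "round_robin"),
    7048: ("OData web services", "stream", "round_robin"),
    8080: ("ClickOnce", "http", "round_robin"),          # static deployment files (assumption)
}


def balancing_for(port):
    """Return (nginx context, balancing method) for a listener port."""
    try:
        _client, context, method = LISTENERS[port]
    except KeyError:
        raise ValueError(f"no listener configured for port {port}") from None
    return context, method


for port, (client, context, method) in sorted(LISTENERS.items()):
    print(f"{port:>5}  {client:<20} {context:<7} {method}")
```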

For the NAV image I’ve overridden the healthcheck interval so that the load balancer can react more quickly to scaling or failover of the NAV service.

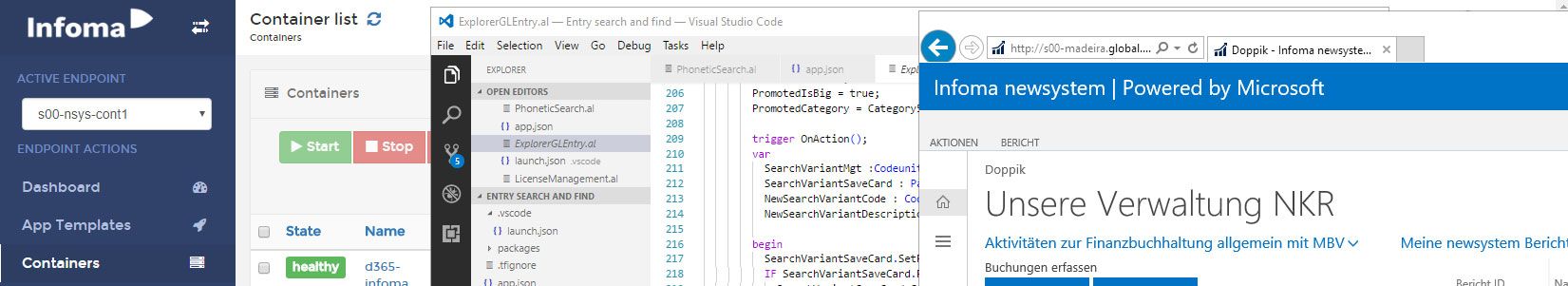
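Why a shorter interval helps can be estimated with a bit of arithmetic. This Python sketch gives a rough upper bound, assuming a hung (not exited) NAV instance: Docker starts each check `interval` seconds after the previous one finished, a check may take up to `timeout`, and `retries` consecutive failures mark the task unhealthy; the load balancer then picks up the new DNS answer within its re-resolution TTL. The 5-second values are hypothetical, not the settings shown in the image.

```python
def worst_case_failover_seconds(interval, timeout, retries, lb_ttl):
    """Rough upper bound, in seconds, before a hung instance stops receiving new
    connections: `retries` failed checks, each started `interval` seconds after
    the previous one finished and allowed up to `timeout` seconds, plus up to
    `lb_ttl` seconds until the load balancer re-resolves the service name.
    Ignores the (short) delay until Docker's DNS drops the unhealthy task."""
    return retries * (interval + timeout) + lb_ttl


# Docker's defaults (interval 30 s, timeout 30 s, 3 retries) with a 5 s TTL
print(worst_case_failover_seconds(interval=30, timeout=30, retries=3, lb_ttl=5))  # 185
# Hypothetical shortened interval and timeout
print(worst_case_failover_seconds(interval=5, timeout=5, retries=3, lb_ttl=5))    # 35
```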

The deployment is done via docker stack deploy --compose-file docker-stack.yml mystack. This uses the docker-stack.yml file, in which the load balancer, NAV and the SQL server are defined as services. The hostname of the NAV service should be passed to the load balancer in its UP_HOSTNAME environment variable; this hostname is resolved at runtime.

The NAV service uses the defined SQL server service as an external database and also activates ClickOnce. Make sure to set the PublicDnsName of the NAV service to the hostname of the swarm node running the load balancer; otherwise the Windows client and the ClickOnce deployment won’t work properly. The NAV service also uses a Docker secret for the passwordKeyFile, which is used to decrypt the passwords. endpoint_mode: dnsrr is the only endpoint mode currently supported on Windows, and it makes the hostname of the NAV service resolve to the IPs of all existing instances.

The SQL server automatically attaches the database files specified in the attach_dbs environment variable. I just copied out these database files from a stopped NAV container.
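If you prefer to generate the attach_dbs value instead of writing it by hand, a small Python helper can do it. This assumes the format documented for the microsoft/mssql-server-windows images (a JSON array of objects with `dbName` and `dbFiles`); the helper, the database name and the file paths are hypothetical.

```python
import json


def attach_dbs_value(databases):
    """Build the attach_dbs value: a JSON array of {"dbName", "dbFiles"} objects
    (format assumed from the microsoft/mssql-server-windows image docs)."""
    return json.dumps(
        [{"dbName": name, "dbFiles": list(files)} for name, files in databases.items()]
    )


# Hypothetical database name and file paths inside the SQL Server container.
print(attach_dbs_value({
    "NAVDB": [r"C:\databases\NAVDB_Data.mdf", r"C:\databases\NAVDB_Log.ldf"],
}))
```

In docker-stack.yml, wrap the generated value in single quotes: YAML leaves backslashes in single-quoted strings untouched, so the escaped JSON paths arrive intact.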

Verifying the load balancing

Let’s now have a deeper look at how the load balancing works. To verify service discovery via DNS, we can run nslookup inside the load balancer container:

PS C:\> docker exec 3ef6e260f7e4 nslookup nav
Non-authoritative answer:
Server:    UnKnown
Address:   10.0.1.1
Name:      nav
Addresses: 10.0.1.8
           10.0.1.11

This returns the IPs of all existing, healthy NAV service instances. If we scale the NAV service, these IPs are updated almost immediately. The load balancer re-resolves the hostname according to the TTL specified in the nginx.conf.

If we now connect multiple Windows and web clients, we can verify the load balancing via netstat inside the load balancer container:

PS C:\> docker exec 3ef6e260f7e4 netstat -n

Active Connections

  Proto  Local Address          Foreign Address        State
  TCP    10.0.1.10:50021        10.0.1.8:7046          TIME_WAIT
  TCP    10.0.1.10:50524        10.0.1.8:7046          ESTABLISHED
  TCP    10.0.1.10:50558        10.0.1.11:7046         ESTABLISHED
  TCP    10.0.1.10:50567        10.0.1.8:80            TIME_WAIT
  TCP    10.0.1.10:50597        10.0.1.11:80           TIME_WAIT
  TCP    10.0.1.10:50609        10.0.1.11:80           TIME_WAIT
  TCP    10.0.1.10:50616        10.0.1.11:80           TIME_WAIT
  TCP    10.0.1.10:50617        10.0.1.11:80           TIME_WAIT

The incoming connections of the web and Windows clients are forwarded to both instances identified above (port 7046 for the Windows client, port 80 for the web client). For the Windows client this is a simple socket that is kept open. For the web client, a cookie is set in the browser so that all subsequent requests are forwarded to the same instance.
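With more clients, counting rows by hand gets tedious. A small Python sketch (a hypothetical helper, not part of the repository) tallies the load balancer’s outgoing connections per backend IP and port from `netstat -n` output, for example captured with `docker exec <container> netstat -n`; the sample is the netstat output from this post:

```python
import re
from collections import Counter

# One TCP row of `netstat -n`: local address, foreign ip:port, state.
ROW = re.compile(r"TCP\s+\S+\s+(\d{1,3}(?:\.\d{1,3}){3}):(\d+)\s+(\w+)")


def connections_per_backend(netstat_output, state=None):
    """Count connections per (foreign IP, port), optionally only in a given state."""
    counts = Counter()
    for ip, port, conn_state in ROW.findall(netstat_output):
        if state is None or conn_state == state:
            counts[(ip, int(port))] += 1
    return counts


sample = """
  TCP    10.0.1.10:50021        10.0.1.8:7046          TIME_WAIT
  TCP    10.0.1.10:50524        10.0.1.8:7046          ESTABLISHED
  TCP    10.0.1.10:50558        10.0.1.11:7046         ESTABLISHED
  TCP    10.0.1.10:50567        10.0.1.8:80            TIME_WAIT
  TCP    10.0.1.10:50597        10.0.1.11:80           TIME_WAIT
  TCP    10.0.1.10:50609        10.0.1.11:80           TIME_WAIT
  TCP    10.0.1.10:50616        10.0.1.11:80           TIME_WAIT
  TCP    10.0.1.10:50617        10.0.1.11:80           TIME_WAIT
"""

# Open Windows client sessions: one per NAV instance on port 7046 in this sample.
print(connections_per_backend(sample, state="ESTABLISHED"))
```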

This is surely not a perfect solution, but it allows us to load balance all NAV client types. I’m pretty sure that Windows support in other “swarm-aware” load balancers will improve and that existing solutions will get better and hopefully support TCP load balancing soon.

Update

As I haven’t mentioned it explicitly yet: this setup also supports Windows authentication with gMSAs. After you have created a gMSA (e.g. via Jakub’s script), you just need to uncomment the credentialspec lines in the Docker stack file.